Please use your reply to this blog post to detail the following:

- Please start with a full description of the nature of your first ML project.

- What was your primary motivation to explore the project you completed?

- Share some insights into what predictions you were able to make on the dataset you used for your project.

- What new skills did you pick up from this project? For example, had you used Jupyter Notebooks before? Did you encounter any weird bugs, twists, or turns in your dataset that caused issues? How did you resolve those issues?

- What types of conclusions can you derive from the images/graphs you’ve created with your project? If you didn’t create charts or graphs and instead explored things like Markov Chains, how much work do you think you need to do to further refine your project to make its output more realistic?

- Did you create any formulas to examine or rate the data you parsed?

Take the time to look through the project posts of your classmates. You don’t have to comment on the posts of your classmates, but if you want to give them praise or just comment on their project if you found it cool, please do.

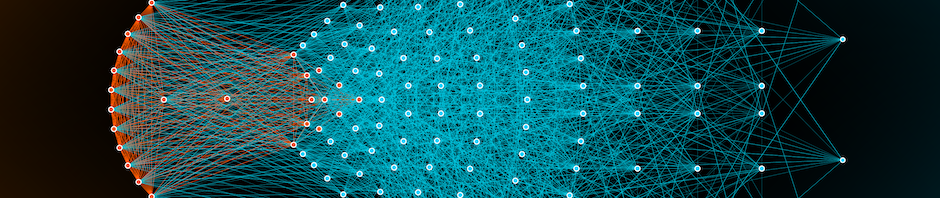

My ML project uses a simple deep learning model that is trained to play chess. It takes in an 8x8x12 array that represents the board: the first six 8×8 planes hold the white piece locations, and the other six hold the black piece locations. Moves are treated as categories, and each move is mapped to an integer; for example, 1833 might represent the move e2e4. The model uses two Conv2D layers, a Flatten layer, and two Dense layers. It uses the ReLU activation function to add non-linearity. The final Dense layer uses a softmax activation, which turns its outputs into a probability for each move class; the predicted move is the integer with the highest probability. The model is trained on around 2.2 million positions from about 22k games.
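Below is a minimal NumPy-only sketch of the input encoding and the "moves as categories" idea described above. Several details are assumptions for illustration and may not match how the project actually builds its arrays and move mapping:

- the plane order (white P, N, B, R, Q, K, then black p, n, b, r, q, k);
- reading the board from the piece-placement field of a FEN string;
- the three-move vocabulary and the raw scores.

The last lines show how a softmax output becomes an integer move: one probability per class, then `argmax`.

```python
import numpy as np

# Assumed plane order: white P, N, B, R, Q, K, then black p, n, b, r, q, k
PIECES = "PNBRQKpnbrqk"

def encode_board(placement: str) -> np.ndarray:
    """Turn the piece-placement field of a FEN string into an 8x8x12 one-hot array.

    Row 0 is rank 8 and column 0 is file a, matching FEN's reading order.
    """
    board = np.zeros((8, 8, 12), dtype=np.float32)
    for row, rank in enumerate(placement.split("/")):
        col = 0
        for ch in rank:
            if ch.isdigit():
                col += int(ch)  # a digit is a run of empty squares
            else:
                board[row, col, PIECES.index(ch)] = 1.0
                col += 1
    return board

def build_move_vocab(moves):
    """Map each distinct UCI move string (e.g. "e2e4") to an integer class id."""
    return {move: idx for idx, move in enumerate(sorted(set(moves)))}

def softmax(logits: np.ndarray) -> np.ndarray:
    exp = np.exp(logits - logits.max())  # subtract the max for numerical stability
    return exp / exp.sum()

START = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR"
x = encode_board(START)
batch = np.stack([x, x])  # np.stack adds a leading batch axis: (N, 8, 8, 12)

vocab = build_move_vocab(["e2e4", "d2d4", "g1f3", "e2e4"])
id_to_move = {idx: move for move, idx in vocab.items()}

# Pretend these are the final Dense layer's raw scores for the three known moves
probs = softmax(np.array([0.5, 2.0, 1.0]))
predicted_move = id_to_move[int(np.argmax(probs))]
```

Checking `batch.shape` against the shape the first Conv2D layer expects is a quick way to catch the kind of shape errors described further down.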

My primary motivation was that I wanted to do something game-related. I was considering Othello, but I felt that there wasn’t a lot of data for it. Chess seemed more mainstream and popular, and thus more doable.

Based on my dataset, my model should be able to look at the board and make good moves. But since there are so many variations in chess, the model sometimes makes bad predictions. Even though the loss was somewhat low, there is no inherent quantity or value attached to a move integer. For example, move integer 1834 (d6g3) is not inherently “one more” than move integer 1833 (e2e4). So, even if the model’s prediction is numerically close to the actual best move, that doesn’t translate into a relatively good move. This most likely explains why it blunders.

I learned how to use Jupyter Notebook, since I hadn’t used it before. I also encountered many errors while making the model. Initially, I did not convert moves into integers, and that caused problems. After asking ChatGPT, I learned that these models can only work with numbers, not strings. Also, after looking at other notebooks, I learned how to structure my own notebook better. Furthermore, I ran into issues with the shape of my data when feeding it into my model. I learned that checking an array’s .shape is helpful, and I learned how to interpret the values it reports.

Based on my graph, I concluded that my model overfitted slightly, and the accuracy peaked at around 20%. As I explained earlier, the other 80% could result in completely bad moves or maybe decent moves. From this data, I conclude that there is something fundamentally wrong with my model. The main causes are probably lack of data, simple model, or wrong idea.

I did not create any formulas to examine or rate the data I parsed. I could not think of a suitable way to quantify how good my data is. I considered how many moves were played, but I don’t know what makes a game/position inherently better than another one.

(1) My project, ML Daylist, is a tool that generates a Spotify playlist based on previous listening data. It uses the current temperature, time of day, day of the week, and month of the year as inputs to predict songs you might be interested in. Looking closer, the algorithm uses a random forest classifier to predict the music genres a user would most likely be listening to given those inputs. It then feeds these genre predictions into a gradient boosting regressor, which predicts song statistics such as ‘danceability’ or ‘energy’ (these statistics are provided by the Spotify API). My algorithm then uses a least-squares comparison to determine which of the user’s songs are closest to the predicted statistics and adds them to a playlist automatically. To make these predictions more cohesive, I also created a clustering algorithm that calculates how important each statistic is to each genre and weights the statistics accordingly. This is done by finding the distances between the songs in a genre and averaging them. Then, the algorithm takes out one statistic at a time and computes a silhouette score. The higher the silhouette score, the less important the statistic is to a genre. For example, if all songs of a ‘pop’ genre have a high danceability score, taking out that score will not impact the data as much as taking out a different statistic. Finally, to have the data to complete this project, I also created a file that automatically records my listening history.
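The matching step above can be sketched as a weighted sum of squared differences between each song’s statistics and the predicted statistics, keeping the closest songs. This is a hypothetical illustration rather than the project’s actual code. The `valence` statistic, the song names, the target profile, and the per-statistic weights are all invented. In the real tool, the weights would come from the per-genre importance step.

```python
# Statistic names follow the Spotify features mentioned above; "valence" is an added assumption
STATS = ["danceability", "energy", "valence"]

def weighted_sq_distance(song, target, weights):
    """Sum of weighted squared differences between a song's statistics and the predicted target."""
    return sum(weights[s] * (song[s] - target[s]) ** 2 for s in STATS)

def pick_songs(library, target, weights, k=2):
    """Return the names of the k songs whose statistics are closest to the target."""
    ranked = sorted(library, key=lambda name: weighted_sq_distance(library[name], target, weights))
    return ranked[:k]

# Hypothetical listening history and a hypothetical predicted profile for one genre
library = {
    "song_a": {"danceability": 0.80, "energy": 0.70, "valence": 0.60},
    "song_b": {"danceability": 0.30, "energy": 0.90, "valence": 0.20},
    "song_c": {"danceability": 0.75, "energy": 0.60, "valence": 0.70},
}
target = {"danceability": 0.78, "energy": 0.65, "valence": 0.65}
# Invented per-genre weights: larger means the statistic matters more for this genre
weights = {"danceability": 2.0, "energy": 1.0, "valence": 0.5}

playlist = pick_songs(library, target, weights)
```

Squaring the differences means one badly mismatched statistic (like `song_b`’s danceability) pushes a song far down the ranking.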

(2) Spotify is a huge part of my life. I am constantly listening to music, and my mood greatly impacts what type of music I prefer at a given time. I have often found the Spotify ‘daylist’ to be spectacular at predicting the mood I am in and suggesting music to match it. I wondered if my own generated playlist, based on data Spotify might not collect (temperature being the main differentiator), could surpass these predictions.

(3) At the start, my daylist seemed to be little more than a random mix of songs from my listening history. This was because the statistics I was basing my predictions on didn’t differ enough to separate genres. Often, I would get rap songs next to folk songs, which just doesn’t make sense. As I improved my algorithm, however, it became much better at clustering genres together, and my daylist started to look a lot more cohesive. While it is certainly not perfect, nor better than the Spotify daylist, I do find myself using it occasionally. That fact shows the quality of my predictions better than any statistic could. That being said, I do have code that evaluates my predictions in a more statistical way (r^2 and the like). These values are not good; however, I never felt it was important to tune them too much, since music is very subjective.

(6) I did create an accuracy score to evaluate my data. This score is the squared difference between each target song statistic and the corresponding predicted statistic, and these differences are added together to create the score for the target song. When I wanted statistical insight into why my predictions were not working as well as I liked, I used this accuracy score instead of r^2, because separating it into pieces gave me a better view of which statistics were important and which were not.

(5) I did not create any graphs for my main project; however, I did experiment with a clustering algorithm before ultimately deciding to go with my own. In these experiments, I generated a scatterplot of song genre clusters to help me visualize how well the algorithm was working. This let me notice quickly that the clustering algorithm was not working as well as I wanted, and adjust accordingly.
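The accuracy score described in (6) can be sketched as a per-statistic squared difference that is then summed. Keeping the individual pieces is what makes it more diagnostic than a single r^2 value. The statistic names and numbers below are hypothetical.

```python
def accuracy_breakdown(actual, predicted):
    """Squared difference for each statistic, plus their sum as the overall score (lower is better)."""
    parts = {stat: (actual[stat] - predicted[stat]) ** 2 for stat in actual}
    return parts, sum(parts.values())

# Hypothetical target song and prediction
actual = {"danceability": 0.5, "energy": 0.75}
predicted = {"danceability": 0.75, "energy": 0.25}

parts, score = accuracy_breakdown(actual, predicted)
worst = max(parts, key=parts.get)  # the statistic contributing the most error
```

Here `energy` contributes most of the total, which points to the statistic worth investigating first.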

(4) One skill that this project has really improved, perhaps ironically, is my ability to use tools like ChatGPT and JetBrains AI effectively to learn. I have certainly tried to use AI in the past, but usually for small bugs that I just didn’t want to spend the time figuring out. For this project, I dove into a world of machine learning I had never touched before. As such, LLMs were useful for explaining the basics of the algorithms I was using in a way I had never encountered before. Throughout this project, I have gotten much better at prompt engineering to get the results I am looking for from these LLMs. This project has also, of course, drastically increased my understanding of how machine learning models work. Going into this project, I sort of thought of them as a mysterious box that somehow learned, but now I understand the effort that goes into cleaning data, tuning the models, and ultimately sorting the results.

(1) I created a program that uses a GradientBoostingClassifier to predict the type of a custom Pokémon from other user-inputted statistics.

(2) I’ve always been a huge fan of Pokémon, and when I found a dataset on Kaggle, I was super excited to try it out.

(3) There really is no correlation in the stats. A lot of a Pokémon’s type comes from its design, and it’s really hard to find a pattern in the statistics. If I were to do this again, I would use images instead of statistics, because type is much easier to predict from an image, since things like color matter for type.

(4) I learned a lot of Python, as I hadn’t used Python since 9th-grade intro to CS, so I had to relearn a lot before I could start working on the actual AI. My biggest issue was that I had too little data for most of my project. My original idea was more of a generative AI, where I could give it a name and it would spit out a bunch of data. The problem was that, since there were no patterns, my AI was essentially random, and it was a struggle to keep my MSE under 1000. The final project is much simpler than I intended because, by the time I realized I couldn’t get results from my data, it was too late to change topic.

(5) I cannot derive any conclusions from my data as my model has 6% accuracy due to my lack of correlations. To make this project work, I would need to add images and image recognition to allow for more accurate type predictions as color in the image correlates strongly with type and not much else does.

(6) I did not create any formulas.

Please start with a full description of the nature of your first ML project.

My project imports a dataset with 35 columns, 32 of which describe various health metrics, covering 2,149 patients. For more information about the dataset, please refer to the sources section, which is linked. My project stems from another project that trains various models on the dataset and displays each model’s accuracy and other metrics, such as F1 score. That project is also linked below. After copying the models, I found that the GradientBoostingClassifier was one of the highest-scoring models, so part of my project was optimizing it as best I could. I also created a Google Form, had my father fill it out with his health metrics, and then estimated the probability that he will eventually develop Alzheimer’s, assuming he lives to old age.

What was your primary motivation to explore the project you completed?

My primary motivation for this project was that my family has a history of Alzheimer’s. Both of my grandparents on my father’s side suffered from it, so I had a personal motivation to see if I could train a model that detects Alzheimer’s from health metrics. A secondary motivation was that I wanted a dataset that would be easy to work with, since this is my first try at AI and machine learning, and the Alzheimer’s dataset was extensive and clean.

Share some insights into what predictions you were able to make on the dataset you used for your project.

Using GradientBoostingClassifier, I was able to correctly predict whether or not a patient had Alzheimer’s about 96% of the time. After some hyperparameter tuning, I was able to get perfect training accuracy but lower validation accuracy, so the model overfitted to the training set. I was not able to get a validation accuracy higher than 0.96. Then I created another dataset with my dad’s health information. Because I couldn’t get data on certain metrics, such as cholesterol levels, those features had to be dropped, so the model trained for this dataset only reached an accuracy of about 0.72. Using this new model, I was able to predict the probability that my dad has Alzheimer’s.

What new skills did you pick up from this project? For example, had you used Jupyter Notebooks before? Did you encounter any weird bugs, twists, or turns in your dataset that caused issues? How did you resolve those issues?

To start, this is the first time I’ve ever coded in PyCharm. Naturally, that means I have never used Jupyter Notebooks before either.

What types of conclusions can you derive from the images/graphs you’ve created with your project? If you didn’t create charts or graphs and instead explored things like Markov Chains, how much work do you think you need to do to further refine your project to make its output more realistic?

I did not use images or graphs, and I did not explore other techniques either. I mainly looked at my accuracy, F1 score, and confusion matrix. The F1 score can be more informative than accuracy when false negatives and false positives matter, and since those are important in healthcare, it was a helpful metric. The confusion matrix showed me what the model got wrong during validation, and it helped when I wanted to minimize false negatives.

Did you create any formulas to examine or rate the data you parsed?

I did not. The F1 score is itself based on a formula, but I didn’t use that formula directly to improve my model.
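For reference, the F1 formula is defined in terms of the confusion-matrix counts:

- precision = TP / (TP + FP)
- recall = TP / (TP + FN)
- F1 = 2 · precision · recall / (precision + recall)

Below is a hand-rolled sketch with hypothetical labels. scikit-learn’s `sklearn.metrics.confusion_matrix` and `sklearn.metrics.f1_score` compute the same quantities.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = Alzheimer's)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn

def f1_from_labels(y_true, y_pred):
    """Harmonic mean of precision and recall; 0.0 when either is undefined or zero."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical validation labels: 1 = diagnosed, 0 = not diagnosed
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
f1 = f1_from_labels(y_true, y_pred)
```

In this toy case, accuracy is 6/8 = 0.75 while F1 is about 0.67, because F1 ignores the many easy true negatives. The single false negative (the third patient) is exactly the kind of error the confusion matrix makes visible.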

1. My first machine learning project focused on developing a convolutional neural network (CNN) to detect key points on images of feet wearing flip-flops, with the goal of quantifying the toe spread by measuring the distance between the first two toes. I collected frames from walking trial videos, resized them to 512×512 pixels, and used ImageJ to scale the images for consistency. Using Label Studio, I annotated the images with two key points and processed the data into NumPy arrays for training. The CNN model, built using TensorFlow’s Keras API, employed Conv2D and MaxPooling layers to extract and downsample features, followed by Dense layers to capture complex relationships. The model predicted two key point coordinates, with Mean Squared Error (MSE) as the loss function and Adam as the optimizer. I trained the model on a dataset of 78 images, splitting the data for validation, and saw a significant improvement in MSE when increasing the dataset size. The final model was capable of predicting toe key points, allowing for future enhancements with larger datasets.

2. Diabetes can cause a loss of sensation in the lower extremities, a condition called diabetic neuropathy, and quality footwear is incredibly important to prevent complications such as diabetic foot ulcers. Over 100 million people globally have diabetic neuropathy; 18 million of them develop diabetic foot ulcers, and 6 million of those will undergo amputations (https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7919962/). A study found that the pressure from traditional straight-edged flip-flop straps is responsible for as much as 15% of diabetic foot ulcers caused by footwear (https://pubmed.ncbi.nlm.nih.gov/29916424/). Five years ago, I designed a new type of flip-flop strap called the rolled inner seam strap. Its purpose was to reduce localized pressure and the risk of abrasion on the top of the foot, specifically the diabetic foot. In areas where the only affordable footwear is flip-flops and access to good healthcare is limited, this innovation could be pivotal. I wanted evidence that the rolled inner seam flip-flops did in fact reduce localized pressure, so I conducted human walking trials. I placed a pressure sensor at the toehold area and had my volunteers walk in my flip-flops and in a commonly worn flip-flop. After analyzing my results, I found a statistically significant difference: the rolled inner seam reduced the pressure. Then I started thinking about the mechanics of the foot. Since I was measuring pressure at the toehold area, the distance between those two toes would affect the measurement. So I wanted to find out whether there was any correlation between toe spread, type of flip-flop, and pressure. The end goal of this project is software in which you can take a picture of your foot while wearing a flip-flop, feed it into my model, tell it the type of flip-flop you are wearing, and get the estimated pressure that flip-flop will put on your foot.

3. In my project, the predictions focused on identifying the key points on images of feet, specifically the coordinates of the first two toes (the big toe and the second toe), to measure toe spread. Using the convolutional neural network (CNN) I developed, I was able to accurately predict the (x, y) coordinates of these two points from the processed images. The model performed well on the test dataset, with the Mean Squared Error (MSE) dropping from 822.3 (with a smaller dataset of 30 images) to 370.3 (with 78 images). This significant improvement demonstrated that the model became more accurate with increased data, suggesting that further data collection would likely lead to even better performance. The predictions were saved as text files with (x, y) coordinates for each image, which can be used to calculate the distance between the two toes.

4. Through this project, I picked up several new skills, particularly in image preprocessing, dataset annotation, and key point detection using CNNs. One of the major skills I developed was working with Label Studio for annotating images and OpenCV for image processing. Although I had some experience with machine learning frameworks like TensorFlow, this project deepened my understanding of how to use the Keras API to build and train CNNs for specialized tasks like key point detection. I also got to work with JSON files for handling annotations and using Python’s os and json modules for file management. Additionally, working with ImageJ to ensure consistency in image scaling was new for me, and I gained more experience using tools like train_test_split from Scikit-Learn for data handling and splitting. One of the biggest challenges I faced was related to ensuring that all the images were consistently scaled since they were originally captured from different distances in walking trial videos. This led to variability in image dimensions and scaling issues when trying to map key points. To resolve this, I used the strap of my flip-flop, which had a known size, as a reference to adjust the scaling across all images, ensuring that the pixel-to-centimeter ratio was the same.

5. From the outputs of my project, I was able to draw some conclusions regarding the accuracy of the model in detecting toe spread. While I didn’t create traditional charts or graphs, the drop in Mean Squared Error (MSE) as the dataset increased from 30 to 78 images indicated that a larger dataset leads to more reliable predictions. The model’s ability to predict (x, y) coordinates for the toes allows for further analysis, such as calculating toe spread and exploring biomechanical patterns. To refine the output and make it more realistic, I would need to work on increasing the dataset size and incorporating data augmentation techniques.

6. The primary metric I used was Mean Squared Error (MSE), which is commonly used in regression tasks like key point detection to measure the average squared difference between the predicted key points and the actual annotated key points. This metric allowed me to rate the accuracy of the model’s predictions. To scale the images for consistency, I used a simple pixel-to-centimeter ratio formula, based on the known size of the flip-flop strap (1.25 cm), which ensured that the images were uniformly scaled across the dataset. While these approaches were sufficient for this phase of the project, future refinements might involve more advanced statistical formulas or biomechanical metrics to better quantify toe spread and analyze patterns in the data.
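The scaling and distance steps above can be sketched with the 1.25 cm strap as the reference length. This is a hypothetical illustration, not the project’s code. The strap’s pixel width, the predicted coordinates, and the ground-truth coordinates are invented, and the MSE here is taken over raw pixel coordinates.

```python
import math

STRAP_WIDTH_CM = 1.25  # known strap size from the text

def cm_per_pixel(strap_width_px: float) -> float:
    """Scale factor from the strap's measured width in pixels."""
    return STRAP_WIDTH_CM / strap_width_px

def toe_spread_cm(big_toe, second_toe, strap_width_px):
    """Euclidean distance between two (x, y) key points, converted from pixels to centimetres."""
    dist_px = math.dist(big_toe, second_toe)
    return dist_px * cm_per_pixel(strap_width_px)

def keypoint_mse(pred, true):
    """Mean squared error over all coordinates (x1, y1, x2, y2)."""
    return sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true)

# Hypothetical predicted key points on one image where the strap spans 25 pixels
spread = toe_spread_cm((200, 300), (230, 340), strap_width_px=25)
mse = keypoint_mse([200, 300, 230, 340], [204, 297, 230, 345])
```

The two points are 50 pixels apart, and 25 pixels correspond to 1.25 cm, so the spread comes out to 2.5 cm.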

Please start with a full description of the nature of your first ML project.

My project uses a cosine similarity metric between two users’ top songs to determine which songs are most compatible between the two users. There is a website where a user can log in through OAuth to share their Spotify song data; the model, coded in Python, then sanitizes the data and uses it to produce a blended list of songs. The login and user data are also stored securely in a custom database I coded myself.
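Below is a minimal sketch of cosine similarity applied to a two-user blend. The post does not say how the blend is ranked, so the scoring rule here is an invented example: each song is scored by its worse fit to either user’s average feature vector, so a song has to suit both people. The two-feature vectors are also hypothetical. Real Spotify features such as tempo sit on different scales and would usually be standardized first.

```python
import math

def cosine_similarity(a, b):
    """cos(theta) = (a . b) / (|a| |b|); 1 means same direction, 0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def mean_vector(vectors):
    """Average feature vector, used here as a rough 'taste profile' for one user."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def blend(user_a, user_b, k=2):
    """Rank every song by its worse fit to the two taste profiles (hypothetical scoring rule)."""
    taste_a = mean_vector(list(user_a.values()))
    taste_b = mean_vector(list(user_b.values()))
    pool = {**user_a, **user_b}

    def score(name):
        return min(cosine_similarity(pool[name], taste_a), cosine_similarity(pool[name], taste_b))

    return sorted(pool, key=score, reverse=True)[:k]

# Hypothetical two-feature vectors for each user's top songs
user_a = {"a1": [1.0, 0.0], "a2": [0.8, 0.6]}
user_b = {"b1": [0.0, 1.0], "b2": [0.6, 0.8]}
playlist = blend(user_a, user_b)
```

The songs that sit between the two users’ tastes (`a2` and `b2`) rank highest. The songs at either extreme (`a1`, `b1`) fall out of the blend.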

What was your primary motivation to explore the project you completed?

I wanted to do something music-related for my project, and I knew that Spotify had an API from which I could retrieve data. Because I wasn’t able to get enough data for an actual predictive model, I decided to go a different route and use cosine similarity to determine which songs are most compatible between two users.

Share some insights into what predictions you were able to make on the dataset you used for your project.

From what I can tell, the blends that I have gotten between me and my friends have been pretty good. I’m not sure how it would work with people with vastly different music tastes to me but I’m assuming it would be at least ok.

What new skills did you pick up from this project? For example, had you used Jupyter Notebooks before? Did you encounter any weird bugs, twists, or turns in your dataset that caused issues? How did you resolve those issues?

I learned how to create and interact with an SQL database and figured out how to use an Oracle Autonomous Database from Python. I also learned how to make API calls to Spotify using Spotipy. Lastly, I learned how to preprocess data and use cosine similarity to rank the similarity between different songs. One weird bug I encountered was Spotipy caching Spotify tokens in a cache file. This prevented me from accessing other Spotify accounts’ data, because I was only ever using the cached token for my own account. My workaround was deleting the .cache file every time I tried to get data from the API, but in the future, I want to find a better solution to this issue.

What types of conclusions can you derive from the images/graphs you’ve created with your project? If you didn’t create charts or graphs and instead explored things like Markov Chains, how much work do you think you need to do to further refine your project to make its output more realistic?

I explored the similarity scores of the different songs. I realized that songs by the same artist didn’t have very high cosine similarities. Songs in the same genre didn’t have especially high similarity scores either, because genre is not included in Spotify’s song data. In the future, I would want to look into incorporating something like Ryan’s genre system from his project, or at least including the artist genres.

Did you create any formulas to examine or rate the data you parsed?

I didn’t create any formulas because the dataset was very small. All the preprocessing and running the data through the model took only a few seconds at most (unless something went wrong).

This project is about a model I called the NBA Statistics Prediction Model. It uses linear regression to predict the performance of NBA teams based on factors like rebounds, turnovers, and assists. Specifically, it fits linear regression models to predict these statistics for the home team in a game. For each statistic, the model takes a selected set of factors and uses them together as predictors. In other words, it fits a multiple linear regression in which each factor is an “x” variable, and the model learns a coefficient for each one so that the combined prediction for that statistic is as accurate as possible. The user inputs a home team, an away team, the date of the game, and the season, and the model predicts the number of points as well as the values of the various other statistics.
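A multiple linear regression like the one described above can be sketched with NumPy’s least-squares solver. The rows below are invented home-team box scores with three columns: field goals made, three-pointers made, and free throws made. Points are set to follow 2·FGM + 3PM + FTM exactly, so the fit should recover an intercept of 0 and coefficients of 2, 1, and 1. The project itself may have used a library such as scikit-learn’s `LinearRegression`, which solves the same problem.

```python
import numpy as np

def fit_linear_regression(X, y):
    """Ordinary least squares fit of y ≈ intercept + X @ coefs."""
    X = np.asarray(X, dtype=float)
    design = np.column_stack([np.ones(len(X)), X])  # leading column of ones gives the intercept
    beta, *_ = np.linalg.lstsq(design, np.asarray(y, dtype=float), rcond=None)
    return beta[0], beta[1:]

def predict(intercept, coefs, X):
    return intercept + np.asarray(X, dtype=float) @ coefs

# Hypothetical rows: [field goals made, three-pointers made, free throws made]
X = [[40, 15, 12], [38, 20, 10], [42, 12, 14], [35, 18, 8], [45, 10, 15]]
# Points follow 2*FGM + 3PM + FTM exactly in this made-up data
y = [107, 106, 110, 96, 115]

intercept, coefs = fit_linear_regression(X, y)
next_game = predict(intercept, coefs, [[41, 14, 13]])
```

Each learned coefficient is the “weight” of one x variable, which is the sense in which the model “predicts a coefficient” for every factor.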

I wanted to do something sports-related because I’ve done statistical analysis on scientific data before. I was going to do a project on image recognition for volleyball, but I didn’t think I had the time or the computing power for that model. Also, in volleyball, we talk a lot about how certain statistics (hitting and passing percentages) can predict outcomes, and I wanted to see if there was a similar factor for NBA games. I also wanted to do something related to data analysis and statistical prediction because I think it will be the most relevant to my future programming projects.

I was able to predict the number of points scored by the home and away teams, as well as the number of rebounds, turnovers, assists, free throws made, field goals made, and blocks by the home team. I did so by using the columns in the dataset to build a linear regression model for each statistic, holding out a quarter of the data as the test set. After making my predictions, I measured the accuracy of each model using r^2, mean absolute error, and other metrics. We can conclude that our predictions for points, rebounds, and field goals are highly accurate, as their r^2 values were 0.961263, 1.00000, and 0.993272, respectively. This makes sense because the strongest predictors for these statistics are available in our data. However, the other statistics all had r^2 values below 0.5. This is likely because those statistics lack the best predictor variables (I would have liked more individual player statistics or offensive/defensive ratings). Because of these missing statistics, I was unable to accurately predict the statistics more associated with defense, the opponents’ statistics, or the win percentage.
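The two accuracy metrics mentioned above follow short formulas:

- r^2 = 1 − (sum of squared residuals) / (total sum of squares)
- MAE = average absolute error

The values below are made up. scikit-learn exposes the same metrics as `sklearn.metrics.r2_score` and `sklearn.metrics.mean_absolute_error`.

```python
def r2(y_true, y_pred):
    """1 - SS_res / SS_tot: the share of variance around the mean that the predictions explain."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

def mae(y_true, y_pred):
    """Average absolute difference, in the statistic's own units (e.g. points)."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical home-team points vs. predictions on a tiny test set
actual = [100, 110, 120, 130]
predicted = [102, 108, 121, 129]
r2_value = r2(actual, predicted)
mae_value = mae(actual, predicted)
```

By this formula, an r^2 of exactly 1.0 corresponds to every residual being zero.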

I had used Jupyter Notebooks before, but only for simple math and statistical calculations (column/data analysis); I had not used them to this extent for machine learning. I had also never written machine learning code independently, so learning how to write the models for my linear regressions was new, as was the machine learning process itself (cleaning data, testing, etc.). For one, I wasn’t aware that I had to split my data into training and test sets, and I had to do further research on the different machine learning models for statistical prediction and their pros and cons. I also ran into an issue when looking up the game on a specific date: the date wouldn’t be found in my dataset, even though the game was there when I checked the dataset manually. I initially addressed this by using pd.to_datetime(game_date), but this turned out to be inconsistent for some reason, so I asked Gemini, which provided an additional line of code that solved my bug. I also didn’t expect to be unable to calculate the point differential, offensive rating, and defensive rating, which I had wanted to use to calculate the winning percentage. This caused my model to focus on predicting statistics instead.
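The exact line Gemini suggested isn’t shown in the post, so the following is only a hedged illustration of one common cause of this kind of bug. A date lookup can fail when the query string is converted with `pd.to_datetime` but the dataset column is not, or when the column carries a time of day. The sketch parses both sides and compares calendar days only. The column name `GAME_DATE` and the rows are hypothetical.

```python
import pandas as pd

def find_games_on(df: pd.DataFrame, game_date: str, date_col: str = "GAME_DATE") -> pd.DataFrame:
    """Return the rows whose date falls on game_date, ignoring any time-of-day component."""
    # Parse the stored column too, so both sides of the comparison are datetimes
    stored = pd.to_datetime(df[date_col])
    # normalize() sets the time to midnight, so "2023-01-05 19:30" matches "2023-01-05"
    target = pd.to_datetime(game_date).normalize()
    return df[stored.dt.normalize() == target]

# Hypothetical schedule rows
games = pd.DataFrame({
    "GAME_DATE": ["2023-01-05 19:30:00", "2023-01-06 00:00:00"],
    "HOME_TEAM": ["BOS", "LAL"],
})
match = find_games_on(games, "2023-01-05")
```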

I didn’t make images or graphs, but I calculated other statistics for model accuracy. I found that the predictions for points, rebounds, and field goals were the most accurate, as they had r^2 values of 0.961263, 1.00000, and 0.993272, respectively. However, the rest of my statistics had r^2 values below 0.5. This indicates that, though I have strong predictors for certain statistics, I lack the data to predict all of my statistics accurately. For my output to be more realistic, I would need data for a larger variety of statistics, like offensive/defensive rating, point attempts, point differential, and more. More games, as well as running the model for longer periods of time to adapt its linear regression line, would also make the output more realistic.

I didn’t personally create any new formulas to examine or rate the data. I wrote a formula for turnover percentage, but that was a standard equation I found on the internet (the universal turnover percentage equation). I also wrote a win percentage formula with help from ChatGPT, and that statistic later helped predict some of the other statistics.
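For reference, a widely used turnover percentage estimates turnovers per 100 plays as 100 × TOV / (FGA + 0.44 × FTA + TOV), where 0.44 × FTA approximates the number of possessions that end in free throws. The post doesn’t show which version was used, so this form is an assumption. The win percentage below is a simple hypothetical version, not the one written with ChatGPT.

```python
def turnover_pct(tov, fga, fta):
    """Turnovers per 100 plays, using the common 0.44 free-throw-attempt weighting."""
    return 100 * tov / (fga + 0.44 * fta + tov)

def win_pct(wins, losses):
    """Fraction of games won; 0.0 before any games are played."""
    games = wins + losses
    return wins / games if games else 0.0

# Hypothetical single-game and season numbers
tov_example = turnover_pct(14, 85, 20)  # about 13 turnovers per 100 plays
win_example = win_pct(30, 10)
```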

1. My machine learning project identifies the typeface of text in an image. It uses a convolutional neural network for image classification. I got the dataset from Kaggle; it contains 48 distinct fonts, and I built the model so that it ultimately flags one of those fonts as the most probable. I started by learning what convolutional neural networks are, and then I tailored my model to the dataset I was working with. The model accepts 64×64×3 images and passes 3×3 convolution kernels over them, followed by an Average Pooling layer. I chose average pooling over max pooling because font images usually have dark letters on white paper, and average pooling preserves those darker features against a lighter background better than max pooling does. The model then simplifies the image with two more rounds of convolution and average pooling, followed by a Flatten layer and two fully connected layers. The Flatten layer turns the stacked feature maps into a one-dimensional array (hence “flattening” it). Each value in that array gets a weight, and each unit in the next layer adds up all the values multiplied by their weights, plus a bias term. The model ultimately classifies the image into one of the 48 labels. Finally, to add a practical layer to the project, I used OpenCV to connect my trained model to my webcam and make predictions on webcam images in real time.
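The pooling choice and the dense-layer arithmetic described above can be checked on a tiny array with NumPy. The 4×4 patch (0 = dark ink, 1 = white paper) and the layer weights are invented. In a Keras model, the corresponding layers would be `AveragePooling2D`/`MaxPooling2D`, `Flatten`, and `Dense`.

```python
import numpy as np

def pool2x2(image, mode="average"):
    """Non-overlapping 2x2 pooling on a 2-D array whose sides are even."""
    h, w = image.shape
    blocks = image.reshape(h // 2, 2, w // 2, 2)  # blocks[i, a, j, b] = image[2i + a, 2j + b]
    return blocks.mean(axis=(1, 3)) if mode == "average" else blocks.max(axis=(1, 3))

def dense(x, weights, bias):
    """One fully connected layer: output_j = sum_i(x_i * w_ij) + b_j."""
    return x @ weights + bias

# Tiny grayscale patch: a single dark pixel in the top-left block, solid ink bottom-left
patch = np.array([
    [0.0, 1.0, 1.0, 1.0],
    [1.0, 1.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
])
avg = pool2x2(patch, "average")
mx = pool2x2(patch, "max")

flat = avg.flatten()  # 2x2 -> length-4 vector, as a Flatten layer does
out = dense(flat, np.full((4, 3), 0.5), np.array([0.0, 0.1, -0.1]))
```

Max pooling turns the top-left block into pure white (1.0) and loses the lone dark pixel. Average pooling keeps a trace of it (0.75), which matches the reasoning for choosing average pooling on dark-on-light text.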

2. I had read an article about AI’s impact on identity fraud, specifically signatures on official documents. Making sure a signature matches an individual’s past signatures, so that it can serve as a reliable form of identification, is becoming more and more crucial in an age where AI can replicate pretty much any human behavior. It’s sort of like a CAPTCHA, but for actual individuals. Inspired by this, I wanted to make an AI that could detect computer-generated text, not in a logical sense but visually.

3. My first model overfit somewhat, for two reasons, I think. One is that all of the dataset images were in the same format without much variance. The other is that the model accepted images at such a low resolution. Some of the fonts are really difficult to distinguish even with the human eye; for example, the Georgia, Garamond, and Californian FB typefaces look nearly identical. I believe that reducing already very similar images to a low resolution was why my model performed badly. I improved the model by having it accept higher-resolution 64×64 images. Later, I realized that in TensorFlow, the training data generator can add variance to the data by randomly rotating the images and zooming in or out. I also think I used too few epochs for a training set with more than 100,000 images, but I have no evidence to back this up. This is when my model started to perform comparatively better on non-training data and I began getting results. One thing I can’t figure out is why the model tends to have high accuracy among fonts that look similar to the human eye but lower accuracy on fonts that look completely different. For example, when it mispredicts Comic Sans, a fairly distinctive typeface, it labels it as a font that looks completely different.

4. Aside from getting more familiar with Jupyter notebooks, which I realized are incredibly useful for these kinds of projects, I got better at simply taking a stab at something I had no idea how to do. I had minimal Python experience before this class, but I learned to take advantage of Flint, YouTube, and Stack Overflow: not necessarily knowing every answer, but knowing how to find those answers. It was truly remarkable how accessible answers were with generative AI and the internet. Another skill I learned was reading API documentation to see what certain classes and methods can do. I tried not to take the easy way out by having Flint explain the code for me; instead, I read some of the TensorFlow Keras API documentation myself to experiment with what the classes could do. One problem I encountered was data preprocessing. Working with such a large dataset, I didn’t know how to organize it into training and validation groups in a memory-efficient way. Code I found online sliced the dataset manually by indexing, which I incorporated into my project. Overall, staying memory-efficient on my device was a challenge when I was making my second model as well.
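The index-slicing idea mentioned above can be sketched as follows, though this is not the online code the project used. Shuffle a list of indices once, slice it into training and validation parts, and only look up the items for one batch at a time, so the full image set never has to sit in memory. The file names are hypothetical, and actual image loading is left out.

```python
import random

def split_indices(n_items, val_fraction=0.2, seed=0):
    """Shuffle item indices once and slice them into training and validation sets."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    n_val = int(n_items * val_fraction)
    return indices[n_val:], indices[:n_val]

def batches(paths, indices, batch_size):
    """Yield file paths one batch at a time, so only that batch needs loading into memory."""
    for start in range(0, len(indices), batch_size):
        yield [paths[i] for i in indices[start:start + batch_size]]

paths = [f"img_{i}.png" for i in range(10)]  # hypothetical file names
train_idx, val_idx = split_indices(len(paths), val_fraction=0.2)
first_batch = next(batches(paths, train_idx, batch_size=4))
```

Because only integer indices are sliced, the split itself costs almost no memory, regardless of how large the images are.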

5. I haven't made a graph yet, but one thing I can do is start training on images of different resolutions and randomizing inputs such as text size. Diversifying the training dataset could improve my model and reduce the overfitting it shows right now, where it predicts that my face is the Baskerville font with 60% confidence.
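The "different resolutions" idea can be simulated without regenerating the dataset. The sketch below randomly downsamples each image and then upsamples it back to its original size. Randomizing text size would still require re-rendering the font images. The function name and the set of factors are hypothetical, and the sketch assumes a 2-D grayscale image whose sides are divisible by every factor.

```python
import numpy as np

def random_resolution(img, rng, factors=(1, 2, 4)):
    """Downsample a 2-D image by a random integer factor, then upsample it back."""
    f = int(rng.choice(factors))
    h, w = img.shape
    if h % f or w % f:
        raise ValueError("image sides must be divisible by the chosen factor")
    # Block-average downsampling: each f x f block becomes one pixel.
    small = img.reshape(h // f, f, w // f, f).mean(axis=(1, 3))
    # Nearest-neighbour upsampling back to the original (h, w) shape.
    return np.repeat(np.repeat(small, f, axis=0), f, axis=1)
```

The output keeps the 64×64 input shape the model expects, but some samples carry only 32×32 or 16×16 worth of detail.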

6. I incorporated a confidence value into every prediction the model displays in real time. It comes from the softmax activation function in the output layer, which produces a probability distribution over the possible classes and is commonly used in multiclass classification. Values closer to 0 mean the model is less ‘confident’, and 1 means it is absolutely confident, with the softmax putting 100% of its probability on a single font. However, I also found that high confidence doesn't necessarily mean the model is accurate.
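Here is a minimal sketch of how a confidence value can be read off a softmax output. It takes the probability of the most likely class. The logits and font names below are made-up inputs for illustration.

```python
import numpy as np

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    z = logits - np.max(logits)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def predict_with_confidence(logits, class_names):
    """Return the top class and the probability softmax assigns to it."""
    probs = softmax(np.asarray(logits, dtype=float))
    best = int(np.argmax(probs))
    return class_names[best], float(probs[best])
```

For example, `predict_with_confidence([2.0, 1.0, 0.1], ["Baskerville", "Garamond", "Georgia"])` returns `"Baskerville"` with a confidence of about 0.659. Softmax always spreads probability only among the known fonts, so even an input that is not a font at all, such as a face, still gets a fairly confident font label.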

I created a football drive simulator. It first predicts the yards gained from a weighted random number and then factors in the situation. These factors include the play call, which is chosen by a weighted random classifier that itself depends on the time, distance, and down. Putting these pieces together produces a complete drive that changes every time it runs.
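Here is one possible structure for such a simulator, sketched with the standard library. A weighted random play call depends on down and distance, and the yards gained are then sampled for that play type. Every probability and yardage distribution here is a hypothetical placeholder, not a value from the original project, and game time is not modeled.

```python
import random

# Hypothetical numbers, not taken from the original project.
PASS_RATE_BY_DOWN = {1: 0.45, 2: 0.50, 3: 0.70, 4: 0.60}
YARDS = {"run": (4.3, 3.0), "pass": (6.5, 8.0)}  # (mean, std dev) of yards gained

def call_play(rng, down, to_go):
    """Weighted random play call: passing is more likely on later downs and long distances."""
    p_pass = min(PASS_RATE_BY_DOWN[down] + (0.15 if to_go >= 7 else 0.0), 0.95)
    return rng.choices(["pass", "run"], weights=[p_pass, 1 - p_pass])[0]

def simulate_drive(rng, start=25, max_plays=40):
    """Simulate one drive; `yardline` counts yards from the offense's own goal line."""
    yardline, down, to_go = start, 1, 10
    for _ in range(max_plays):
        # Simple fourth-down rule: kick unless it is 4th and 2 or shorter.
        if down == 4 and to_go > 2:
            return "field goal attempt" if yardline >= 65 else "punt"
        mean, sd = YARDS[call_play(rng, down, to_go)]
        gained = round(rng.gauss(mean, sd))
        yardline += gained
        if yardline >= 100:
            return "touchdown"
        if yardline <= 0:
            return "safety"
        if gained >= to_go:
            down, to_go = 1, min(10, 100 - yardline)
        elif down == 4:
            return "turnover on downs"
        else:
            down, to_go = down + 1, to_go - gained
    return "drive cut short"
```

Running `collections.Counter(simulate_drive(rng) for _ in range(10_000))` with `rng = random.Random(0)` tallies drive outcomes. That makes it easy to check how often touchdowns come up under a given set of weights.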

I have always been fascinated by the winning percentages shown on ESPN, so I wanted to recreate a similar model. To that end, I worked on generating random but realistic football drives that depend on the strength of the offense and the defense.

While my project did not make predictions in that sense, something that surprised me was how often teams scored touchdowns. Also, and this may just be a flaw in my model, the classifier called a lot more run plays than I expected based on watching games and the newer “Air Raid” offenses.

I had never used Jupyter before and learned a bit about it, though I mostly used it only as the final way to run my project. I also learned how beneficial it can be to build separate models for different parts of the game instead of treating each one as a single variable. I also learned how much the choice of dataset matters: I wanted to add certain parameters, such as the score, but my dataset did not include them, which made it impossible to use that information.

I think the main way to make things more realistic is to create many more models for different parts of the game, such as turnovers and a team's willingness to go for it on fourth down. This would give each team more personality and make the simulation more accurate and realistic. Once that is done, I would want to simulate games many times to estimate the percentage chance of one team beating another. I might also change the way I calculate yards to allow a wider range of outcomes that better reflects the actual game of football. Finally, I might run another season of football data through the project to see how the game and teams have evolved over the years.
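Simulating a game many times to estimate a win percentage is a Monte Carlo estimate: the fraction of simulated games a team wins. The sketch below stands in for the full drive simulator with a hypothetical per-drive scoring model. Each drive ends in a touchdown, a field goal, or nothing, with team-specific probabilities. The probabilities and the 11 drives per game are assumptions.

```python
import random

def simulate_game(rng, team_a, team_b, drives=11):
    """Toy game: each team gets `drives` drives; team = (p_touchdown, p_field_goal)."""
    def points(p_td, p_fg):
        total = 0
        for _ in range(drives):
            r = rng.random()
            if r < p_td:
                total += 7
            elif r < p_td + p_fg:
                total += 3
        return total
    return points(*team_a), points(*team_b)

def win_probability(team_a, team_b, n_sims=10_000, seed=0):
    """Fraction of simulated games team A wins, counting ties as half a win."""
    rng = random.Random(seed)
    wins = ties = 0
    for _ in range(n_sims):
        a, b = simulate_game(rng, team_a, team_b)
        wins += a > b
        ties += a == b
    return (wins + 0.5 * ties) / n_sims
```

Replacing `simulate_game` with a function that runs real simulated drives would turn this into the win-percentage tool described above. The estimate's error shrinks roughly with the square root of `n_sims`.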

No, I did not test my results much, if at all, during the project, which may have led to some of the inaccuracies. When I did try to test the classifier, it was hard, because there is so much chance involved that it is difficult to predict a specific outcome reliably, which is why the classifier's accuracy at the time was around 70%.

Please start with a full description of the nature of your first ML project.

My project was an F1 race position prediction algorithm. It predicted a driver's finishing position given the driver, the car's constructor, and the qualifying position. I used a Kaggle dataset of all races from 1950 onwards, but only used data from 2000 onwards. I based the predictions on ratings of driver experience, reliability, form, constructor form, and qualifying position.
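Ratings like experience, form, and reliability can be built as per-driver features with pandas. The sketch below assumes a hypothetical results table with columns `driver`, `season`, `round`, `finish` (numeric position), and `finished` (bool). These are not necessarily the Kaggle dataset's column names. Each feature uses only races before the current one, so the model never sees the result it is trying to predict. Constructor form could be computed the same way by grouping on a constructor column.

```python
import pandas as pd

def add_driver_features(results: pd.DataFrame, window: int = 3) -> pd.DataFrame:
    """Add experience, form, and reliability columns computed from earlier races only."""
    df = results.sort_values(["driver", "season", "round"]).copy()
    g = df.groupby("driver")
    # Experience: number of earlier races for this driver.
    df["experience"] = g.cumcount()
    # Form: mean finishing position over the previous `window` races.
    df["form"] = g["finish"].transform(
        lambda s: s.shift(1).rolling(window, min_periods=1).mean())
    # Reliability: share of earlier races the driver finished.
    df["reliability"] = g["finished"].transform(
        lambda s: s.astype(float).shift(1).expanding().mean())
    return df
```

The `shift(1)` is the important design choice. Without it, a driver's form would include the very race being predicted, which leaks the answer into the inputs.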

What was your primary motivation to explore the project you completed?

I really like Formula 1, and I wanted to explore this project because I had never built a regression-based prediction algorithm on tabular data like this. I had done computer vision and CNNs in the past, so I wanted to try something new.

Share some insights into what predictions you were able to make on the dataset you used for your project.

I was able to predict race finishing positions from the dataset I had. However, I feel I could have gone deeper. I could have used more input variables, such as lap times, to find the standard deviation of each driver's lap times. Given more time and data, I could definitely have improved this model, since so much in F1 is unpredictable without the proper data: crashes, car failures, and lap times simply can't be predicted without it. Still, the predicted race outcomes were fairly accurate, and I believe that with these position predictions I can make my fantasy F1 team 10 times better.
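The lap-time idea amounts to a per-driver consistency statistic. Here is a minimal standard-library sketch that assumes lap data arrives as hypothetical `(driver, lap_time_seconds)` pairs. In practice, pit-stop and safety-car laps would probably need filtering first, since they inflate the spread.

```python
from collections import defaultdict
from statistics import mean, stdev

def lap_time_consistency(laps):
    """Return {driver: (mean_lap_time, lap_time_stdev)} for drivers with 2+ laps."""
    by_driver = defaultdict(list)
    for driver, lap_time in laps:
        by_driver[driver].append(lap_time)
    return {d: (mean(ts), stdev(ts)) for d, ts in by_driver.items() if len(ts) >= 2}
```

A lower standard deviation means more consistent laps, which could become one more input column next to form and reliability.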

What new skills did you pick up from this project? For example, had you used Jupyter Notebooks before? Did you encounter any weird bugs, twists, or turns in your dataset that caused issues? How did you resolve those issues?

I learned quite a bit about Random Forest regressors and classifiers, specifically how they work. As I mentioned earlier, I also learned about regression models and simple prediction algorithms, as I hadn't really done anything like this before. I had also completely forgotten how to use pandas and its DataFrames to build models with ease; for CNNs and CV I mainly used NumPy. I encountered a few minor bugs in my data, the biggest being data types. Converting all the strings into integer values was difficult, as some of the values were DNF and \N. I had to decide what numeric value a DNF should have in a regression, and eventually I just settled on 30.
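Here is a short pandas sketch of the DNF conversion. `pd.to_numeric(..., errors="coerce")` turns any non-numeric entry into NaN, and the NaNs are then filled with the placeholder 30. This assumes that `\N` entries should also be treated as non-finishes, which the post does not state explicitly. When reading the CSV, passing `na_values=[r"\N"]` to `pd.read_csv` is another way to mark those entries as missing.

```python
import pandas as pd

def clean_positions(raw: pd.Series, dnf_value: int = 30) -> pd.Series:
    """Convert finishing positions to integers, mapping non-numeric entries to dnf_value."""
    # "DNF", "\N", or any other non-numeric string becomes NaN here.
    pos = pd.to_numeric(raw, errors="coerce")
    return pos.fillna(dnf_value).astype(int)
```

Note that the string `"\N"` must be written as `r"\N"` or `"\\N"` in Python source. A plain `"\N"` is a syntax error because `\N{...}` is the named-Unicode escape.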

What types of conclusions can you derive from the images/graphs you’ve created with your project? If you didn’t create charts or graphs and instead explored things like Markov Chains, how much work do you think you need to do to further refine your project to make its output more realistic?

I can conclude that there is some correlation between my model's predicted positions and the actual outcomes of races. I created a graph using Matplotlib of the predicted values vs. the actual values, and it ended up looking decent. Besides that, I didn't really create any more graphs. I think a lot more work is necessary, and a lot more data using different metrics needs to go into the prediction, to make this actually accurate.
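"Some correlation" can be put into a single number with the Pearson correlation coefficient. A predicted-vs-actual scatter plot is easier to read with a y = x reference line marking perfect predictions. This is a sketch with hypothetical function names. Matplotlib is imported inside the plotting function so the correlation helper works without it.

```python
import numpy as np

def pearson_r(predicted, actual):
    """Pearson correlation between predicted and actual positions (-1 to 1)."""
    return float(np.corrcoef(predicted, actual)[0, 1])

def plot_pred_vs_actual(predicted, actual):
    """Scatter of predicted vs. actual positions with a y = x reference line."""
    import matplotlib.pyplot as plt
    fig, ax = plt.subplots()
    ax.scatter(actual, predicted, alpha=0.3)
    lo = min(min(actual), min(predicted))
    hi = max(max(actual), max(predicted))
    ax.plot([lo, hi], [lo, hi], linestyle="--")  # points on this line are perfect predictions
    ax.set_xlabel("Actual finishing position")
    ax.set_ylabel("Predicted finishing position")
    return fig
```

A value of `pearson_r` near 1 means predictions rise and fall with actual results. It does not by itself show that the predictions are close, because a model that is always off by five places can still correlate perfectly.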

Did you create any formulas to examine or rate the data you parsed?

I utilised an R² value, an absolute error, and a mean error to rate my model. Besides these, I didn't create any formulas.
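Here is a minimal NumPy sketch of these evaluation formulas. It assumes "absolute error" means the mean absolute error and "mean error" means the mean signed error (the average bias). The post does not define them further. scikit-learn's `r2_score` and `mean_absolute_error` compute the first two as well.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """R^2, mean absolute error, and mean signed error (bias) of predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    residuals = y_true - y_pred
    ss_res = np.sum(residuals ** 2)                  # unexplained variation
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)   # total variation around the mean
    return {
        "r2": float(1 - ss_res / ss_tot),
        "mae": float(np.mean(np.abs(residuals))),
        "mean_error": float(np.mean(y_pred - y_true)),  # > 0 means predictions run high
    }
```

For example, predicting `[2, 3, 4, 5]` when the true positions are `[1, 2, 3, 4]` gives an MAE of 1, a mean error of +1, and an R² of 0.2. A constant offset hurts both MAE and R² even though the ordering is perfect.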

Please start with a full description of the nature of your first ML project.

My machine learning project rates teams’ offensive and defensive strengths and weaknesses. It also predicts game outcomes and season outcomes. It adjusts tempo-free stats using my own algorithm. It fits normal and skew normal distribution models using the scipy library. It predicts game outcomes by simulating values from the distributions. It predicts season outcomes with a combination of my own algorithms and a bracketology model trained on historic data with logistic regression from the scikit-learn library. Its simulations combine distribution models of team strength, specific aspects of college basketball (like conference tournaments, NET rankings, quad resumes, and the NCAA tournament), and distribution models for the logistic regression.
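Simulating a game from fitted distributions can be sketched with NumPy alone. Draw each team's points from its own normal distribution, then summarize the simulated margins. The means and standard deviations are supplied directly here as hypothetical inputs. Fitting them could use `scipy.stats.norm.fit`, or `scipy.stats.skewnorm.fit` for the skewed version, with draws taken from the fitted `skewnorm` via its `rvs` method. The sketch also assumes the two teams' scores are independent, which ignores shared factors like game tempo.

```python
import numpy as np

def simulate_matchup(mean_a, sd_a, mean_b, sd_b, n_sims=100_000, seed=0):
    """Simulate games by drawing each team's points from its own normal distribution."""
    rng = np.random.default_rng(seed)
    pts_a = rng.normal(mean_a, sd_a, n_sims)
    pts_b = rng.normal(mean_b, sd_b, n_sims)
    margin = pts_a - pts_b
    return {
        "p_a_wins": float(np.mean(margin > 0)),
        "spread": float(np.median(margin)),        # team A's typical margin
        "total": float(np.median(pts_a + pts_b)),  # a natural over/under line
    }
```

With team A averaging 75 points and team B averaging 65, both with a standard deviation of 10, team A wins roughly three quarters of the simulations and the median margin is close to 10 points.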

What was your primary motivation to explore the project you completed?

I love college basketball and I wanted to make a stronger predictive algorithm with better available stats than what’s currently out there for free. I also wanted to include both men’s and women’s basketball, which is not widely available at all among college basketball advanced metrics. Certain stats in my model (like assist rate) and aspects of my simulations (like conference tournaments and NET readjustment) are not included in even some of the best models online.

Share some insights into what predictions you were able to make on the dataset you used for your project.

I was able to predict game outcomes and generate optimized betting lines from my algorithm (money line, point spread, and over/under). I also predicted full season outcomes like conference tournament results and NCAA tournament results. Another feature that’s unique to my model is simulating season results conditional on game results to create a rooting guide for each team that shows who fans should root for in other games to boost their own tournament hopes.
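A rooting guide of this kind can be computed by grouping season simulations by the result of one game. Then compare how often a team earns a bid in each group. The sketch below uses a hypothetical four-team toy conference where the top two teams by wins get bids. It illustrates the conditional-simulation idea, not the author's model.

```python
import random

# Hypothetical toy conference: team strengths and a fixed schedule.
STRENGTH = {"A": 0.6, "B": 0.5, "C": 0.45, "D": 0.3}
SCHEDULE = [("A", "B"), ("C", "D"), ("A", "C"), ("B", "D"), ("A", "D"), ("B", "C")]

def toy_season(rng):
    """Simulate every game; the top two teams by wins get bids."""
    wins = {team: 0 for team in STRENGTH}
    results = {}
    for game_id, (home, away) in enumerate(SCHEDULE):
        p_home = STRENGTH[home] / (STRENGTH[home] + STRENGTH[away])
        winner = home if rng.random() < p_home else away
        wins[winner] += 1
        results[game_id] = winner
    # Ties are broken by strength, purely for illustration.
    ranked = sorted(wins, key=lambda t: (wins[t], STRENGTH[t]), reverse=True)
    return results, set(ranked[:2])

def rooting_guide(simulate_season, team, game_id, n_sims=20_000, seed=0):
    """Estimate P(team gets a bid | winner of game_id) for each possible winner."""
    rng = random.Random(seed)
    counts = {}  # winner of game_id -> [simulations with a bid, total simulations]
    for _ in range(n_sims):
        results, bids = simulate_season(rng)
        tally = counts.setdefault(results[game_id], [0, 0])
        tally[0] += int(team in bids)
        tally[1] += 1
    return {winner: hits / total for winner, (hits, total) in counts.items()}
```

Calling `rooting_guide(toy_season, "C", game_id=3)` conditions on the B vs. D game. A team C fan would root for whichever winner comes with the higher bid probability.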

What new skills did you pick up from this project? For example, had you used Jupyter Notebooks before? Did you encounter any weird bugs, twists, or turns in your dataset that caused issues? How did you resolve those issues?

I picked up statistics skills. I learned how to use machine learning to apply statistical modeling to real world data. I also learned about how to use algorithms that don’t overfit and correctly represent the real world situations they model. I spent a lot of time working on figuring out the best way to do my bracketology model to simulate how the selection committee really thinks.

What types of conclusions can you derive from the images/graphs you’ve created with your project? If you didn’t create charts or graphs and instead explored things like Markov Chains, how much work do you think you need to do to further refine your project to make its output more realistic?

I’ve looked at output from what my algorithm would’ve said at various points in the 2023–24 college basketball season, and I’ve gotten to see a lot about how the algorithm does at different points of the year as it learns more about each of the teams. I’ve looked at the lines that it creates for the games and bar charts for bid odds/tournament seed/round reached for conference tournaments and NCAA tournaments, as well as the rooting guide, and it’s really interesting knowing how things played out. I will be excited to see it in real time this season.

Did you create any formulas to examine or rate the data you parsed?

I used R² to evaluate my bracketology model and my recency-bias model. Interestingly, although many models impose a strong recency bias, my model found that recency is not important: the best way to predict future games is to weight all other results of the season equally.
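One common way to parameterize recency bias is exponential decay with a half-life measured in games. A game's weight then halves every `half_life` games into the past. Under that assumption, the finding above corresponds to the best-fitting half-life being effectively infinite, which means equal weights. This sketch is illustrative and is not the author's model.

```python
def recency_weighted_mean(values, half_life=None):
    """Weighted mean of game-by-game values, ordered oldest first.

    A game's weight halves every `half_life` games into the past;
    half_life=None weights every game equally.
    """
    n = len(values)
    if half_life is None:
        weights = [1.0] * n
    else:
        weights = [0.5 ** ((n - 1 - i) / half_life) for i in range(n)]
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)
```

Tuning `half_life` would mean scoring each candidate value by how well the weighted ratings predict later games, for example with R², and keeping the best one.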

Please start with a full description of the nature of your first ML project.

My first ML project is primarily an exploration of training classifier models on limited or easily created datasets. The model at the core of the project is a pre-trained ResNet50 network with additional linear activation layers on top of it, finally converging to a single sigmoid activation node with range 0-1. The primary dataset consisted of screen captures of Yae Miko (a character from the Chinese game Genshin Impact) and of Not Yae Miko. To create the dataset, a preprocessing program was written that uses OpenCV to filter out any video frames in which no faces or bodies are detected, compiling the remaining frames into a generated folder.
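Here is a sketch of that kind of preprocessing step. It reads frames with OpenCV, keeps every n-th frame that a detector accepts, and writes the kept frames to a folder. The post does not say which detector was used. The OpenCV Haar frontal-face cascade is an assumption here, and it may not fire reliably on stylized game characters. The function names are hypothetical. OpenCV is imported inside the functions that need it so the frame-selection logic can be reused with any detector.

```python
from pathlib import Path

def select_frames(frames, keep, every_n=1):
    """Yield (index, frame) for every n-th frame that the `keep` predicate accepts."""
    for idx, frame in enumerate(frames):
        if idx % every_n == 0 and keep(frame):
            yield idx, frame

def read_video(path):
    """Yield frames from a video file using OpenCV."""
    import cv2
    cap = cv2.VideoCapture(str(path))
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            yield frame
    finally:
        cap.release()

def make_face_detector(min_neighbors=5):
    """Return a predicate that is True when a Haar cascade finds at least one face."""
    import cv2
    cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml")
    def has_face(frame):
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        faces = cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=min_neighbors)
        return len(faces) > 0
    return has_face

def extract_dataset(video_path, out_dir, every_n=10):
    """Save kept frames as PNG files in out_dir and return how many were saved."""
    import cv2
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    saved = 0
    for idx, frame in select_frames(read_video(video_path), make_face_detector(), every_n):
        cv2.imwrite(str(out / f"frame_{idx:06d}.png"), frame)
        saved += 1
    return saved
```

Sampling every n-th frame also cuts down on near-duplicate images from consecutive frames, which would otherwise make a small dataset look larger than it really is.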

The model can also be trained on GPUs, which allows the computation to be parallelized and speeds up training considerably.

The final program runs entirely from a command-line interface (CLI) and has convenient features such as setting hyperparameters.
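A CLI with hyperparameter flags is commonly built with Python's standard `argparse` module. Every flag name and default below is hypothetical, except the 0.95 threshold, which comes from the pass/fail rule described in this post.

```python
import argparse

def build_parser():
    """Hypothetical training CLI with common hyperparameters as flags."""
    p = argparse.ArgumentParser(description="Train a binary image classifier.")
    p.add_argument("data_dir", help="folder containing the training images")
    p.add_argument("--epochs", type=int, default=20)
    p.add_argument("--batch-size", type=int, default=32)
    p.add_argument("--lr", type=float, default=1e-4, help="learning rate")
    p.add_argument("--threshold", type=float, default=0.95,
                   help="sigmoid output above this counts as a positive")
    p.add_argument("--device", choices=["cpu", "gpu"], default="gpu")
    return p
```

A script would call `build_parser().parse_args()` under an `if __name__ == "__main__":` guard. Then `python train.py frames/ --epochs 5 --lr 3e-4` overrides two defaults and keeps the rest. Note that argparse exposes `--batch-size` as `args.batch_size`.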

What was your primary motivation to explore the project you completed?

I really wanted to do something with Yae Miko and Machine Learning, so a natural first step was to let a Machine Learning program be able to recognize a Yae Miko.

Share some insights into what predictions you were able to make on the dataset you used for your project.

Overfitting was the primary issue because of the limited dataset. It resulted in more false negatives than desirable but, pleasantly, almost zero false positives. On a separate validation dataset that included other videos and photos of my own Yae Miko cosplay, the model reached 99.2% accuracy.
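False negatives, false positives, and accuracy all come from comparing thresholded sigmoid outputs with the true labels. Here is a minimal sketch. The scores and labels in the usage example are made up for illustration.

```python
def binary_report(scores, labels, threshold=0.5):
    """Count TP/FP/TN/FN for sigmoid outputs `scores` against 0/1 `labels`."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted = 1 if score > threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 0:
            tn += 1
        else:
            fn += 1
    total = tp + fp + tn + fn
    return {"tp": tp, "fp": fp, "tn": tn, "fn": fn, "accuracy": (tp + tn) / total}
```

For example, `binary_report([0.99, 0.2, 0.97, 0.01, 0.6], [1, 1, 1, 0, 0], threshold=0.95)` gives 2 true positives, 1 false negative, 2 true negatives, 0 false positives, and 0.8 accuracy. A high threshold like 0.95 trades extra false negatives for fewer false positives.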

What new skills did you pick up from this project? For example, had you used Jupyter Notebooks before? Did you encounter any weird bugs, twists, or turns in your dataset that caused issues? How did you resolve those issues?

I picked up the Python programming language for this project and learned to use workflow tools for it, including containerization, along with best practices such as pre-commit hooks and virtual environments (venvs).

On the first day of training, my model kept diverging for no apparent reason. I later found the cause to be a mismatched input shape for ResNet, an error that wasted four hours.
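A cheap guard against this kind of bug is to check every batch's shape before it reaches the network. The sketch below assumes the Keras default for ResNet50, which is 224×224 RGB input in channels-last order, `(height, width, channels)`. Whether this was the exact mismatch in the project is not stated in the post.

```python
import numpy as np

EXPECTED_SHAPE = (224, 224, 3)  # assumed: Keras ResNet50 default (height, width, channels)

def check_batch_shape(batch, expected=EXPECTED_SHAPE):
    """Raise early if a batch is not shaped (N, height, width, channels)."""
    batch = np.asarray(batch)
    if batch.ndim != 4 or batch.shape[1:] != expected:
        raise ValueError(f"expected a batch of shape (N, {expected[0]}, {expected[1]}, "
                         f"{expected[2]}), got {batch.shape}")
    return batch
```

A channels-first batch such as `(N, 3, 224, 224)` fails immediately with a readable message instead of surfacing later as unexplained training behavior.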

What types of conclusions can you derive from the images/graphs you’ve created with your project? If you didn’t create charts or graphs and instead explored things like Markov Chains, how much work do you think you need to do to further refine your project to make its output more realistic?

The training loss chart clearly shows that such small datasets train, and overfit, extremely quickly, with the loss dropping to almost 1×10⁻⁵ in only 20 epochs.
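A near-zero training loss shows that the model fits the training set, but overfitting only becomes visible when validation loss stops improving. Early stopping formalizes this check. Keras provides it as `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=..., restore_best_weights=True)`. Here is a plain-Python sketch of the same logic applied to a recorded list of validation losses. The loss values in the test are made up.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the best epoch index seen before training would stop.

    Training stops once validation loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs.
    """
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch
```

With a small dataset, this would likely stop training well before 20 epochs, once validation loss begins rising while training loss keeps falling.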

Did you create any formulas to examine or rate the data you parsed?

No. The pass/fail thresholds were defined arbitrarily: PASS for an output above 0.95 on an expected value of 1, and FAIL for an output of 0.05 on an expected value of 0.