Please use your reply to this blog post to detail the following:

- Please give a full description of the nature of your first AI/ML game project.

- What was the steepest part of the learning curve for this project? Was it learning how to implement the AI or how to use the Pygame/Pyglet library? Please elaborate and explain your answer.

- What went “right” with your project? As in, what worked seamlessly? What went “wrong” with your project? As in, what were your biggest hurdles or where did you have the most trouble debugging or getting your project to run?

- Describe the AI/ML algorithm your game implements. Did you work through a tutorial you found online? Did you start from scratch because you were motivated by a particular game or algorithm and you wanted to implement it using Pygame/Pyglet?

- If you had to teach this class next year, what project would you recommend to students in the Advanced Topics class to give them a broad and comprehensive overview of some fundamental AI algorithms to implement in a game?

- Include your GitHub repo URL so your classmates can look at your code.

Take the time to look through the project posts of your classmates. If any project or project description piques your interest, please reply to that post with feedback. Constructive criticism is allowed, but please keep your comments civil.

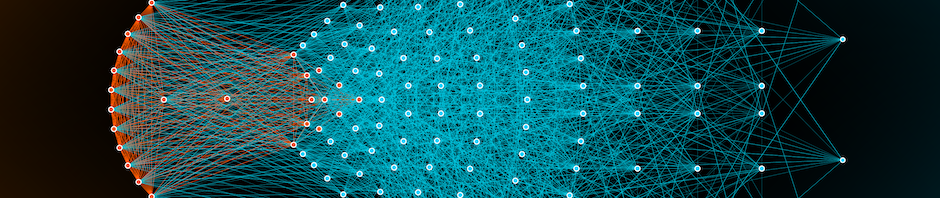

1) For this project, Anand and I worked together, following a tutorial, to create an automated snake game powered by reinforcement learning. To do so, we first swapped the ‘manual’ controls in the code for binary ones (essentially a list whose entries mark which of the straight, right, and left moves is chosen). Next, we created a function to determine the new direction relative to the current direction of motion (so the AI only has to choose among 3 possible moves rather than 4 absolute directions). We also created a get-state function to feed the AI data such as where the obstacles are, where the food is, where the snake currently is, and in what direction the snake is heading. Finally, we passed all of this data to a reinforcement learning model with an input size of 11 (as there were 11 state data points), a learning rate of 0.001 (in an attempt to make the model more accurate at the cost of training speed), a hidden size of 256 (the number of units in the network's hidden layer, if I understand it correctly), and an output size of 3 (representing straight, left, and right). (We also tried to graph our learning progress, but the graph would not work for some reason.)
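
To make the 11 / 256 / 3 sizes concrete, here is a minimal pure-Python sketch of one forward pass through a network with those dimensions. It is only an illustration: the two-layer layout, the ReLU activation, the random weights, and the example state values are all assumptions, and the project itself relies on torch rather than hand-written layers.

```python
import random

# Sizes taken from the post; everything else below is an assumption.
INPUT_SIZE, HIDDEN_SIZE, OUTPUT_SIZE = 11, 256, 3


def make_layer(n_in, n_out, rng):
    """Create a fully connected layer as (weights, biases) with small random weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def linear(layer, x):
    """Compute weights @ x + biases for one input vector."""
    weights, biases = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(x):
    """Zero out negative values (assumed activation)."""
    return [max(0.0, v) for v in x]


def forward(hidden_layer, output_layer, state):
    """11 state values -> 256 hidden units -> 3 action scores."""
    return linear(output_layer, relu(linear(hidden_layer, state)))


rng = random.Random(0)
hidden_layer = make_layer(INPUT_SIZE, HIDDEN_SIZE, rng)
output_layer = make_layer(HIDDEN_SIZE, OUTPUT_SIZE, rng)

state = [0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1]  # made-up 11-value state
scores = forward(hidden_layer, output_layer, state)

# Turn the highest score into a one-hot move list.
best = scores.index(max(scores))
action = [1 if i == best else 0 for i in range(OUTPUT_SIZE)]
```

The hidden size only sets how many intermediate values sit between the 11 inputs and the 3 outputs, which is why it can be changed without touching the game code.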

2) The steepest part of the learning curve was figuring out exactly how the clockwise algorithm worked for identifying the relative directions. Our code determines the new absolute direction from a list of the four directions (left, right, up, and down, stored in clockwise order) based on the chosen relative move (left, right, or straight). After learning that -1 % 4 is 3, we realized how a mod function could be used to create such an algorithm: evaluating (index + 1) % 4 turns right and (index - 1) % 4 turns left, wrapping around the ends of the list and adjusting the direction accordingly.
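
The modulo trick can be sketched in a few lines of Python. The list contents, the function name `next_direction`, and the one-hot move order (straight, right, left) are assumptions made for illustration, not the exact names from the tutorial code.

```python
# Headings in clockwise order (screen coordinates, y grows downward):
# one index forward is a right turn, one index back is a left turn.
CLOCKWISE = ["right", "down", "left", "up"]


def next_direction(current, action):
    """Return the new heading for a relative move.

    action is a one-hot list: [1, 0, 0] straight, [0, 1, 0] right, [0, 0, 1] left.
    """
    idx = CLOCKWISE.index(current)
    if action == [1, 0, 0]:
        return CLOCKWISE[idx]
    if action == [0, 1, 0]:
        # Parentheses matter: idx + 1 % 4 would be parsed as idx + (1 % 4).
        return CLOCKWISE[(idx + 1) % 4]
    if action == [0, 0, 1]:
        # In Python, -1 % 4 is 3, so index 0 wraps around to the last entry.
        return CLOCKWISE[(idx - 1) % 4]
    raise ValueError(f"unknown action: {action}")
```

For example, `next_direction("up", [0, 1, 0])` wraps from the last index back to the first, and `next_direction("right", [0, 0, 1])` wraps from the first index to the last.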

3) For the most part, because we were using a tutorial, almost everything went according to plan. That being said, there was an error with the file name/storage: the tutorial code referenced a premade file, whereas ours needed to both create a file and write to it. Although not technically an issue, we decided to make our game speed value far larger (from 20 to 1000) to make the snake learn faster, as it was taking nearly 45 minutes to reach its ‘maximum’ score (it would peak in the mid-80s; ideally it would never ‘peak’ or stop learning, but we did not have time to fully troubleshoot this). Finally, we changed the reward/penalty code slightly to keep the snake from getting stuck in an infinite loop (where it was chasing its tail). To do so, we simply added an else-if statement that sets the reward to -100 instead of -10 in that scenario.
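
A minimal sketch of that reward rule, under stated assumptions: the -10 and -100 values come from the description above, while the +10 food reward, the loop-detection threshold (`loop_factor * snake_length` frames without eating), ending the game when a loop is detected, and every name in the function are hypothetical.

```python
def compute_reward(ate_food, collided, frames_since_food, snake_length, loop_factor=100):
    """Return (reward, game_over) for one step of the game.

    Only the -10 and -100 penalties are taken from the post; the rest is assumed.
    """
    if collided:
        # Hit a wall or its own body.
        return -10, True
    elif frames_since_food > loop_factor * snake_length:
        # Too long without eating: treat it as circling and punish it harder.
        return -100, True
    if ate_food:
        return 10, False  # assumed food reward
    return 0, False
```

Making the loop penalty larger than the collision penalty tells the agent that wandering forever is worse than dying, which is the behavior change described above.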

4) As I mentioned earlier, we used an online tutorial to implement a reinforcement learning algorithm, specifically one built around a neural network (see question 1 for more).

5) We began our project by following a tutorial for a pathfinder algorithm in which our agent traversed a simple maze. For this reason, I would recommend that future students pursue a similar baseline project, such as a pathfinder algorithm, to gain a better understanding of how the ‘reward’ system works for the AI as well as the explore/exploit rate. On a similar note, I would recommend that future students who decide to pursue a snake game as we did conduct a bit more research into how torch files function, as I am admittedly still a bit confused about that part of our code. (To clarify, I understand the premise of how the associated neural network functions, but I feel there is a lot of behind-the-scenes work that the torch files make possible that I still cannot fully explain.)

6) https://github.com/GMebane525/Snake.git

1) For this project, we used reinforcement learning and a neural network to create an agent and placed it into a Snake game. We used a tutorial to accomplish this. The first thing we had to do was change some things in the actual game code. For example, we wanted to change the snake from having 4 potential moves (left, right, up, down) to having only 3 (straight, left, right). Next, we wanted to create the input for our neural network: the states. There were 11 different states, consisting of the 3 different directions the snake could be moving in, the 4 different directions danger could be (up, down, left, right), and the 4 different places food could be (up, down, left, right). These state values are then passed into the neural network to give us 3 different outputs, which determine what direction the snake should move in.

2) The steepest part of the learning curve for me was just understanding how exploration vs exploitation, and reinforcement learning in general, works. I still don’t understand the nitty gritty, such as why our “hidden input” value for the neural network was 256 (YT tutorial video said it could basically be any value we wanted), but I now understand the general idea behind reinforcement learning. Also, a lot of our data was being stored in tuples, so understanding what a tuple is and what its function is was a big learning curve for me as well, as I had never interacted with them before.
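
One common way to balance exploration and exploitation is an epsilon-greedy rule, sketched below. This is an illustration rather than the project's exact code: the function names, the linear decay schedule, and the 80-game decay window are all assumptions.

```python
import random


def choose_action(q_values, epsilon, rng=random):
    """Pick a move as a one-hot list.

    With probability epsilon, explore (random move); otherwise exploit
    (the move the network currently scores highest).
    """
    n = len(q_values)
    if rng.random() < epsilon:
        choice = rng.randrange(n)  # explore
    else:
        choice = q_values.index(max(q_values))  # exploit
    return [1 if i == choice else 0 for i in range(n)]


def epsilon_for_game(games_played, start=1.0, floor=0.0, decay_games=80):
    """Shrink epsilon linearly so early games explore and later games exploit."""
    return max(floor, start * (1 - games_played / decay_games))
```

Early on, `epsilon_for_game` returns values near 1, so most moves are random and the agent sees many situations; as more games are played, it leans on what the network has learned.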

3) A lot worked right in our code. The agent was able to play the game, and it was obvious that it was learning. In the long run, the scores seemed to be getting better and better. Some things went wrong, though. We were supposed to graph the mean score against the number of games played so that we could see just how much the agent was learning. We can get a sense of it by manually looking through the list of scores, but a graph would be a lot better. Also, since the tutorial's guide coded for an hour straight without ever testing the code, we were left with quite a few typos and errors across the code that we had to go back through and sort out. We also had one error that we needed ChatGPT's help to solve.
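
Even without a working plot, the mean score per game can be computed from the list of scores and printed as a rough text chart. This is a small sketch with made-up scores and a hypothetical `running_mean` helper; it does not use the plotting library from the tutorial.

```python
def running_mean(scores):
    """Return the mean score after each game, in order."""
    means = []
    total = 0
    for games_played, score in enumerate(scores, start=1):
        total += score
        means.append(total / games_played)
    return means


scores = [0, 1, 0, 3, 5, 8]  # made-up scores, one per game
mean_scores = running_mean(scores)

# Print one row per game with a bar whose length tracks the mean score.
for game, (score, mean) in enumerate(zip(scores, mean_scores), start=1):
    bar = "#" * round(mean * 4)
    print(f"game {game:>2}: score {score:>2}  mean {mean:5.2f}  {bar}")
```

The same `mean_scores` list is what a plotting call would take as its y-values, with the game number on the x-axis.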

4) Yes, we used a tutorial from YouTube.

5) I would highly recommend doing a more straightforward agent implementation before this, like a maze game. This is essential to gaining a basic understanding of what a neural network and reinforcement learning do, and to learning about exploration vs. exploitation, learning rate, and a host of other things. As Gil said in his response, I would also highly recommend researching torch files, tuples, and tensors, because they are used throughout the code.

6) https://github.com/anandjss/SnakeGame.git